A century ago, what is today southern Oxfordshire sported apple orchards, green fields, and gallops for racehorses. Among its scattered settlements was Harwell, a place of half-timbered thatched cottages, with the Downs crossing the landscape a few miles away to the south. The peace of this classic English picture-postcard village was interrupted in 1939, when an airfield was built nearby to house Wellington bombers, and again in 1946, when the site was taken over by an organisation referred to by locals as “The Atomic”. This new organisation was in fact Britain’s secret Atomic Energy Research Establishment.

The purpose of this outfit – widely known simply as “Harwell” – was to make the UK the world leader in the development of atomic energy. Within a decade it had done so, and the importance of Harwell’s work and the scale of its achievement are perhaps best shown by the USSR’s decision to spy on it, which it did via the infamous atomic spy Klaus Fuchs, who kept Moscow informed of what was going on there. In 1950, Fuchs was exposed and imprisoned.

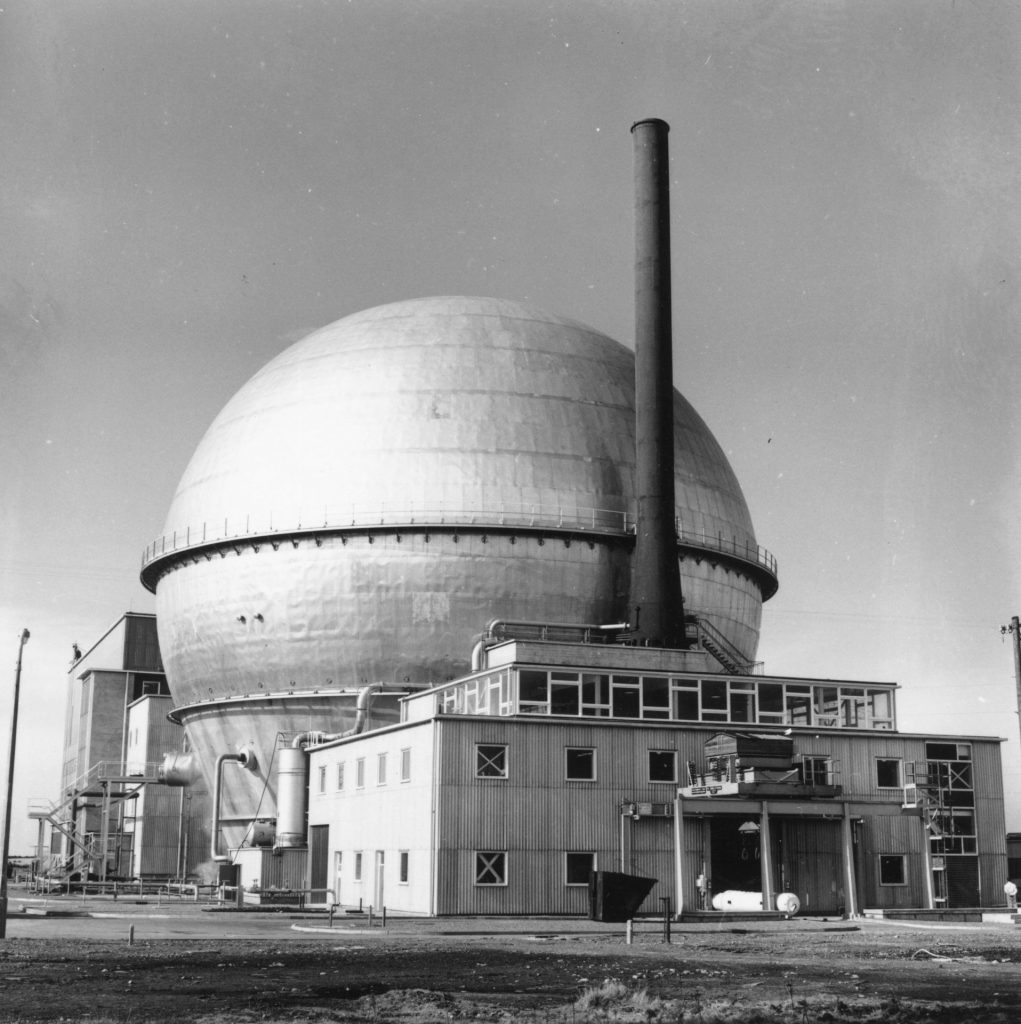

Harwell scientists and engineers designed and built the first experimental nuclear reactor on UK soil, which “went critical” on August 15 1947. It used the phenomenon of nuclear fission – the splitting of the atom – to generate energy. The know-how from this experimental device, and subsequent breakthroughs in reactor technology, led to bigger reactors and to the construction of Calder Hall. Based on the Cumbrian coast, this was the world’s first full-scale commercial nuclear power station, opened by Queen Elizabeth II in 1956. Britain had become the global leader in nuclear power.

The expertise at Harwell spawned other new technologies. The UK was the first nation to develop radioactive isotopes for medicine. Scientists at Harwell made advances in radiation protection, and in the chemical engineering of materials that could withstand the intense conditions within a nuclear reactor. New branches of science grew there and Harwell began to expand. Developments in high-energy nuclear physics led to the creation of the Rutherford Laboratory, adjacent to the Harwell campus, and, as researchers turned their attention to the potential of nuclear fusion, the UKAEA (United Kingdom Atomic Energy Authority) opened a laboratory at nearby Culham.

The original Harwell campus is now multi-purpose. It has become a national centre for space research, for investigating the structures of biological molecules such as viruses, for computing research and for the study of the magnetic structures of materials. The focus on nuclear reactors is long gone. Somewhere along the way, the promise of Calder Hall and of UK leadership in nuclear energy fizzled out.

British nuclear energy now relies heavily on EDF (Électricité de France), a company that is 85% owned by the French government. France operates over 50 nuclear reactors, and a single site, Cattenom, just north of Metz, produces almost as much power as Britain’s entire nuclear industry. Today nuclear power generates about one-sixth of the UK’s electricity, significantly down from its heyday in the last quarter of the 20th century. By contrast, in France nuclear power is by far the largest source of electricity.

Harwell’s birth owed much to the wartime partnership between British and French scientists who worked on the Manhattan Project. How did the UK gain the lead, only to fall behind? And with interest now growing in SMRs – small modular reactors – could the UK once again become a world leader in nuclear energy?

There would have been no nuclear age if we had not first discovered the atomic nucleus. Of all the bricks in nature’s construction kit, the nucleus is the most deeply hidden. In our daily affairs, its only visible presence is the sun, a nuclear furnace converting 600m tons of hydrogen into helium every second through the process of nuclear fusion. In this process, hydrogen nuclei fuse, through a chain of steps, to form helium, a transformation that releases vast amounts of energy. The temperature at the sun’s centre, where this alchemy takes place, is about 15m degrees Celsius.
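As a rough check on the figures above, the sun’s power can be estimated from Einstein’s E = mc². The net effect of the sun’s fusion chain is that four hydrogen atoms end up as one helium-4 atom that is slightly lighter than the four it came from, and the missing mass is released as energy. This is a sketch under stated assumptions, not a solar model: it takes “tons” to mean metric tonnes, uses standard atomic masses for hydrogen-1 and helium-4, and ignores the small share of energy carried off by neutrinos. The constant and function names are illustrative.

```python
# Rough estimate of the sun's power from the hydrogen-burning rate quoted in the text.
# Assumptions (not from the article): "tons" are metric tonnes; standard atomic
# masses below; the energy carried away by neutrinos is ignored.

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0
HYDROGEN_1_MASS_U = 1.007825  # atomic mass of hydrogen-1, unified atomic mass units
HELIUM_4_MASS_U = 4.002603    # atomic mass of helium-4, unified atomic mass units

HYDROGEN_BURNED_KG_PER_S = 600e6 * 1_000  # 600m tonnes per second, in kilograms


def fraction_of_mass_released() -> float:
    """Fraction of the mass of four hydrogen atoms that disappears when they become one helium-4 atom."""
    start = 4 * HYDROGEN_1_MASS_U
    return (start - HELIUM_4_MASS_U) / start


def solar_power_watts(hydrogen_kg_per_s: float = HYDROGEN_BURNED_KG_PER_S) -> float:
    """Power released, via E = mc^2, by the mass that disappears each second."""
    mass_lost_kg_per_s = hydrogen_kg_per_s * fraction_of_mass_released()
    return mass_lost_kg_per_s * SPEED_OF_LIGHT_M_PER_S ** 2


if __name__ == "__main__":
    print(f"mass released as energy: {fraction_of_mass_released():.2%}")
    print(f"power output: {solar_power_watts():.2e} W")
```

Run as written, this gives a mass loss of about 0.7% and a power of roughly 3.8 × 10^26 watts, in line with the sun’s measured output of about 3.8 × 10^26 W.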

Being able to replicate such temperatures on earth and produce useful energy by fusion has been a tantalising goal that science has pursued, without success, for eight decades. Instead, since the 1940s, nuclear energy has been liberated from within the nuclei of existing heavy elements such as uranium, using the process of nuclear fission.

The story of nuclear power begins in France at the cusp of the 20th century, with the discovery of radioactivity and of elements such as radium, which can emit energy spontaneously for thousands of years. The baton was quickly handed over to the UK when, in Manchester and Cambridge, Ernest Rutherford and his team showed that the atom contained a compact, dense central nucleus made of protons and neutrons that is the source of this mysterious “nuclear” energy. It was in England, too, that Leo Szilard, the Hungarian-German physicist, patented his idea of the chain reaction, which would hold the key to liberating nuclear energy. In essence, if a neutron hits a nucleus and splits it in two, this can both liberate nuclear energy and chip off one or two further neutrons. These “secondary neutrons” can hit other nuclei, repeating the fission process with the release of further neutrons and giving rise to exponential growth in the release of energy.
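The exponential growth of a chain reaction can be illustrated with a toy model. This is a sketch under simplifying assumptions, not a reactor calculation: each fission is assumed to set off, on average, k further fissions (reactor physicists call this the multiplication factor), while neutron timing, leakage and geometry are ignored. The function name and its parameters are hypothetical.

```python
def fissions_per_generation(k: float, generations: int, start: float = 1.0) -> list[float]:
    """Expected number of fissions in each generation of an idealised chain reaction.

    k is the average number of further fissions set off by the neutrons
    released in one fission (the "multiplication factor").
    """
    counts = [start]
    for _ in range(generations):
        counts.append(counts[-1] * k)  # each fission seeds k more, on average
    return counts


if __name__ == "__main__":
    for k in (0.9, 1.0, 2.0):
        final = fissions_per_generation(k, generations=10)[-1]
        label = "dies away" if k < 1 else "steady" if k == 1 else "grows"
        print(f"k = {k}: {final:.3g} fissions in generation 10 ({label})")
```

With k = 2 the number of fissions doubles each generation, so after ten generations it is 2^10 = 1,024 times the starting value; with k below 1 the reaction fizzles out. A power reactor is run with k held at 1, whereas an explosive chain reaction needs k well above 1.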

That nuclear fission occurs when neutrons bombard uranium was proved experimentally in 1938. By the time the second world war had begun, teams in France and England had also established that a chain reaction can indeed happen as extra neutrons are liberated. This brought to life Szilard’s idea that fission, and a chain reaction of split atoms releasing particles that then split further atoms, could be the route to liberating nuclear energy. The race to do so began, and as there was also the potential to liberate energy explosively, in what became known as an atomic bomb, the work was secret.

That teams of allied scientists developed the weapon in the Manhattan Project is well known. Less familiar is that a team of primarily British and French scientists combined to work in Canada on the Anglo-Canadian arm of the project. Its goal was to develop the science of a nuclear reactor, an engine that would liberate nuclear energy under control for the benefit of society.

After the war, American strategy was to develop hegemony in nuclear weapons and the so-called special relationship became less special with the US McMahon Act, which ended technical cooperation with the US’s former allies. The British and the French made their primary strategy the development of nuclear energy through nuclear reactors. A tightly kept secret, however, was that Britain also had its own independent atomic bomb project, and that its main focus was bombs made of plutonium rather than uranium.

Plutonium is an element that occurs naturally on Earth only in minute traces, so in practice it must be made, one atom at a time, by irradiating uranium with neutrons in a nuclear reactor. In a nutshell, neutrons can either split uranium and liberate energy, or attach themselves to a uranium nucleus, leading to the production of plutonium. So, while Harwell was developing the know-how to build nuclear reactors designed primarily to liberate atomic energy for peaceful uses, knowledge was also accumulating about the production of plutonium, its chemistry and metallurgy, and how to handle this dangerous product.
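The second route, neutron capture, can be written as a short chain of nuclear reactions. In a uranium-fuelled reactor it is mostly the common isotope uranium-238 that captures neutrons, becoming uranium-239, which then undergoes two beta decays (each turning a neutron in the nucleus into a proton) to become plutonium-239:

$$
{}^{238}_{92}\mathrm{U} + {}^{1}_{0}\mathrm{n} \;\longrightarrow\; {}^{239}_{92}\mathrm{U} \;\xrightarrow{\;\beta^-\;}\; {}^{239}_{93}\mathrm{Np} \;\xrightarrow{\;\beta^-\;}\; {}^{239}_{94}\mathrm{Pu}
$$

The intermediate steps are quick, with half-lives of roughly 23 minutes and 2.4 days, so plutonium builds up in the irradiated fuel and then has to be separated from it chemically.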

In the 1940s and 50s, Harwell drew in a generation of scientists and technicians who had grown up during the war. British and Commonwealth scientists from the Manhattan Project joined new graduates from the universities to work there. Among those who came to the laboratory were John Bell, of Bell’s theorem, a cornerstone of the foundations of quantum theory; John Adams, the builder of particle accelerators who later became director general of Cern, the European Organization for Nuclear Research in Geneva; and many others. The Oxfordshire countryside hosted a gathering of scientific talent on a scale not seen since the Manhattan Project.

The Faustian bargain of plutonium also led to the reactor at Windscale, today known as Sellafield, which produced fuel for the UK’s independent atomic weapons programme. The Windscale plutonium reactor in Cumbria was a few hundred yards away from Calder Hall, but their different roles were a closely guarded secret. Only later did public suspicion grow that Calder Hall’s commercial power programme was a cover for the primary purpose of developing weapons-grade plutonium.

On October 8 1957, a technician at the plutonium reactor pulled a switch too soon, igniting a fire. By the time it was extinguished, three days later, winds had spread radioactive fallout across the UK and the rest of Europe. The demonstration of what a nuclear accident could do, combined with the Harold Macmillan government’s attempts to censor the news and play down events – plus further news of radioactive leaks – increased public scepticism about nuclear power.

The scientific progress in nuclear technology that was made in Britain during the 50s has shaped the modern world. There are now more than 400 operable power reactors across the globe and 160 under construction or planned. Had history turned out differently, the number could have been higher. The Three Mile Island disaster and the Chernobyl meltdown have made the public and politicians instinctively wary of nuclear energy. More recently, the 2011 Fukushima disaster had profound effects on European energy policy, particularly in Germany, which turned sharply against nuclear power in response, opting instead to increase its reliance on Russian gas.

And yet the total number of people killed directly or indirectly by nuclear energy (for example, through radiation-induced cancer) is small compared with the number who have suffered death or serious illness as a result of conventional power production. Accidents in mining coal and in transporting and processing the fuel, along with chronic bronchial conditions and worse caused by the power stations’ noxious emissions, seem to have been noticed less by the public than the dangers linked to nuclear power.

Conventional nuclear reactors tend to be large and expensive, they take a long time to build and they have been notoriously plagued by cost overruns, as Britain is learning with the construction of Hinkley Point C in Somerset. This is spurring new government interest in the idea of small modular reactors (SMRs).

Small modular reactors are designed to be quick to build, and their proponents advertise them as cheaper than the conventional variety because they are based on standardised modules, which can be assembled in a factory and shipped, whole, to the site. One of these reactors could power a town of 100,000 people or a large industrial site. Whereas a conventional nuclear power station feeds into a national grid, SMRs are ideal for small, isolated communities, particularly in developing economies.

The idea is not new, but it was only in 2020 that a prototype was launched in Russia. In the UK, Rolls-Royce is pushing hard to develop these small reactors.

Another of Harwell’s nuclear children is also attracting much public attention. Fusion, the power of the sun, was first explored at Harwell by researchers in the 1940s. Today fusion is perceived as a potentially limitless, clean source of energy, but though it might be a relatively straightforward process in terms of physics, fusion has proved very difficult to realise in practice. A further complication is that fusion uses heavy isotopes of hydrogen – deuterium and tritium. Tritium occurs naturally only in minute traces and has to be made, like plutonium, in a nuclear reactor. So not that clean, then.
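For reference, the deuterium-tritium reaction that most fusion research focuses on, and one common way of making tritium, can be written as follows (the energies are standard textbook values):

$$
{}^{2}_{1}\mathrm{H} + {}^{3}_{1}\mathrm{H} \;\longrightarrow\; {}^{4}_{2}\mathrm{He} + {}^{1}_{0}\mathrm{n} + 17.6\ \mathrm{MeV}
$$

$$
{}^{6}_{3}\mathrm{Li} + {}^{1}_{0}\mathrm{n} \;\longrightarrow\; {}^{4}_{2}\mathrm{He} + {}^{3}_{1}\mathrm{H} + 4.8\ \mathrm{MeV}
$$

Most of the 17.6 MeV is carried off by the neutron (about 14.1 MeV). The second line shows how tritium can be bred by exposing lithium-6 to neutrons, whether in a fission reactor or, as planned for future fusion plants, in a lithium-bearing blanket around the plasma.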

The campus at Culham is the centre for Europe’s collaboration on building a realistic fusion power plant, and it has been running in Oxfordshire since 1983. Recently, claims of a breakthrough in fusion energy have generated much excitement. However, while the breakthrough is real, the whole operation is still highly inefficient.

Fusion has great potential – in theory. Experiments suggest that Europe is moving towards the possibility of a reactor capable of creating realistic amounts of usable fusion energy, and the culmination of this work will be the International Thermonuclear Experimental Reactor (ITER), the first experimental fusion reactor designed to produce more fusion power than is needed to heat its fuel. It is hoped it will begin operation in 2025. The reactor is being built at Cadarache, in France.

France now dominates European nuclear energy production, having left Britain far behind. Following the successful commissioning of Calder Hall, between 1956 and 1967 the UK built 27 reactors, which produced more nuclear energy than France or the US. By 1978, France and the UK were both producing the same amount of nuclear power, but by 1990 France had increased its capacity nine-fold, whereas in the UK it had merely doubled.
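Taking these figures at face value, with both countries producing the same amount of nuclear power $P$ in 1978, the 1990 comparison works out as:

$$
\frac{\text{France}_{1990}}{\text{UK}_{1990}} \approx \frac{9P}{2P} = 4.5
$$

On these figures, by 1990 France was producing roughly four and a half times as much nuclear power as the UK.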

It’s notable perhaps that just as France was launching the largest nuclear construction programme in European history, North Sea oil and gas were coming online. UK government policy seems to have been driven by a belief that gas would make the nation self-sufficient and that the supply would last for ever. By the end of the 20th century neither the outgoing Conservative government of John Major nor the incoming Labour government of Tony Blair saw an economic case for building new nuclear power stations. The French, by contrast, saw nuclear energy as the means for self-sufficiency.

Today, the government has expressed a new enthusiasm for nuclear power, and Keir Starmer has also said that Labour is pro-nuclear. Whoever is in power after the next election, a focus on nuclear energy seems likely. Perhaps then Britain could begin the long process of catching up with the French.

Frank Close is a particle physicist who is emeritus professor of physics at Oxford University and a Fellow of Exeter College, Oxford