As the Supreme Court and President Trump move ever-closer to direct confrontation – whether over illegal deportation, birthright citizenship or any one of a dozen other issues – a similar, if not quite so impactful, confrontation is shaping up in the digital world.

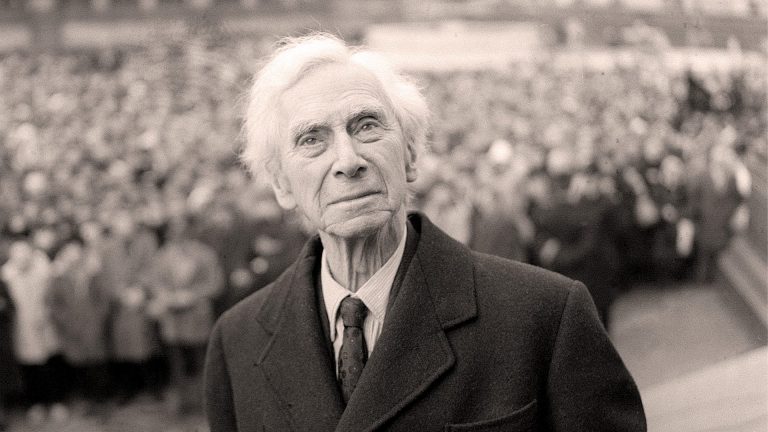

Mark Zuckerberg, a recent convert to Donald Trump’s world view on speech, wokeness, and who knows what else, set up an independent Oversight Board in 2020 as a sort of supreme court for Meta’s moderating decisions across its social networks.

The 21-member board rules on the trickiest moderating decisions and is independent of Meta, and its rulings are supposed to be binding. The board’s decision on Wednesday to publish its first rulings since Zuckerberg dramatically changed his policies, saying they had led to “too much content being censored”, marks a pivotal moment, opening up questions about whether the board still has any role to play in Meta’s future.

Together with rulings on individual cases, the board issued a statement criticising Zuckerberg’s decision to dispense with factcheckers, writing that his “policy and enforcement changes were announced hastily, in a departure from regular procedure” and calling on Meta to assess “whether reducing its reliance on automated detection of policy violations could have uneven consequences globally, especially in countries experiencing current or recent crises, such as armed conflicts.”

It is a public dressing-down of Zuckerberg that will raise eyebrows among his friends in Trumpworld – and they will also be looking carefully at three of the board’s rulings on controversial content, which they are likely to call a restriction of free speech.

In a move mirroring the politicking of real courts, the board has released its rulings in a bundle of 11 cases at once. In some cases, the board calls for content to stay up (or be reinstated). In others, it says content should have been pulled offline.


The three cases most likely to be contentious concern the UK riots of last August. At the peak of the violence, which followed misinformation spread in the wake of a brutal stabbing attack on a dance class of young girls in Southport, many took to Facebook.

The board considered three posts. According to its summary, one “called for mosques… and buildings to be set on fire and ‘migrants,’ ‘terrorists’ and ‘scum’ to be shamed”. The other two involved AI-generated images. One was “of a giant man in a Union Jack T-shirt chasing smaller Muslim men in a menacing way”, accompanied by a time and a place to gather. The other comprised “four Muslim men running in front of the Houses of Parliament after a crying blond-haired toddler. One of the men waves a knife while a plane flies overhead towards Big Ben.”

All three posts were flagged to Facebook’s moderation teams at the time, and the automated system allowed all three to remain online. The posts were later manually reviewed by Facebook and were still ruled not to violate its rules.

However, the board unanimously found that all three posts should have been taken down as all three constituted incitements to violence. The board also criticised Facebook for not engaging in emergency procedures until after the rioting had all but finished in the UK.

The response to the violence across UK towns has become a flashpoint in UK/US relations, with Elon Musk repeatedly accusing Keir Starmer and the UK government of censoring legitimate right-wing voices, and vice-president JD Vance adopting the cause – even attempting to admonish Starmer over it in the Oval Office.

Meta’s Oversight Board – whose members are, like 95% of Meta’s users, overwhelmingly from outside the US – appearing to side with the UK government risks reigniting that row and putting Mark Zuckerberg back in the middle of it.

Some of the board’s other rulings are more likely to win approval in Trumpworld. Two rulings, both relating to anti-migrant speech in Europe, saw the board overturning Facebook’s decisions to say content should be removed. And in two cases on trans discussion – one on bathroom bills and another on trans women competing in sports – the board agreed with Meta that the content should stay up, despite including misgendering and other “intentionally provocative” content.

It similarly found some pro-apartheid content from South Africa should have stayed online, and criticised the removal of a video in which a drag queen referred to herself as a “faggy martyr”, noting slurs are allowed under Meta’s policies when they are self-referential and used to reclaim the words.

By packaging its decisions in this way, the board is clearly trying to position itself as something more than just a so-called censorship panel. Yes, it sometimes says content should be taken down and publishes recommendations that Facebook act more swiftly and stringently. But it is equally keen to show that, on occasion, it pushes Meta to reinstate content or keep it online.

On one level, all of this might feel quite pointless, not least because the Oversight Board feels like a relic of another era – the era in which big tech wanted to look responsible in front of regulators, and the era in which Nick Clegg was the face of Meta’s public relations. Both of those are now over, but the Oversight Board is still there – with its funding contractually guaranteed for another year or two. It is the only remotely independent non-state regulator for social media.

Meta can do three things with the Oversight Board’s decisions. Its contractual agreement requires it to follow the board’s instructions on individual bits of content – so if it didn’t take down the three UK riots posts, for example, it would be in obvious breach of its agreement with the board, surely rendering the position of its members untenable.

But the board’s real impact is supposed to come from using these difficult cases to shape Meta’s wider policies, and it has made numerous recommendations alongside these rulings. At one time, Meta would have made a point of integrating at least some of these into its policies going forward.

That leaves an obvious third route for Meta, both with these decisions and with any that remain for the duration of the board’s term – it could follow the board’s rulings on individual content to the letter, with no fanfare, and do absolutely nothing else, rendering the whole process extremely time-consuming and entirely dispiriting for everyone.

The Oversight Board was never embraced by Meta’s critics, who saw it as a mere nod towards respectability. But if those people put their trust in government regulation of social media, they must surely be disappointed with the results so far: the US will not take any action to make social media safer under Trump.

The EU talks a big game but delivers little, and its first fines under the vaunted Digital Markets Act – €500m for Apple and €200m for Meta – barely qualify as a slap on the wrist. Meanwhile, Elon Musk’s X is an almost entirely unmoderated cesspit and has operated largely untouched since 2022.

Meta’s Oversight Board was an attempt at something which wasn’t just big tech doing what it wanted, but wasn’t just government stepping in. It was, in its way, a genuine experiment to find a new model for governing the online public square.

The Oversight Board’s rulings today, and Meta’s response to them, might be the moment where we see its results.